Email Brian

Brian's Website

Email Tom

Tom's Website

Email Brian Brian's Website |

|

Email Tom Tom's Website |

Go to the Table Of Contents

Did you read the Preface? Thanks!

Note

For this chapter, we had an exceptionally ... enthusiastic ... copy editor. There were lots and lots of changes to our original material, from section titles to command description quotes taken verbatim from man pages being edited for style and grammar. Argh. We've done our best to spruce this one up, but if you run into something really incongruous, then please let us know.

Now we embark on a trek through some of the most common user, or command line, commands. There are over 1,800 such commands available under an OpenLinux system with all packages installed. This chapter addresses a small fraction of that number. Here, we will show you how to get started, be functional, and learn more on your own.

There are many tasks you can accomplish with your Linux-based computer, either as a regular user or as root (superuser). Improper use of commands can create new and innovative failure modes that may require reinstalling your system software. If this happens, don't be discouraged. Making mistakes is part of the learning process.

Hint

Work under a normal user account, rather than as root, whenever possible. This small step protects you from many of the pitfalls that the new user may encounter. Another useful trick lies in remembering that Linux is case sensitive. Less and LESS and less are three distinct strings, only one of which is a program (the latter).

This chapter presents commands by function rather than in an alphabetical listing. For example, files, directories, links, and permissions are gathered together as related concepts and objects. With a few exceptions, commands are listed in this chapter with an introductory paragraph or two setting the context for the program, followed by a more formal listing in the following form:

Name - Brief command description

Usage : name required [optional...] [argument...]

|

|

option | variant |

Description of option |

This scheme is typically followed with one or more examples of command use, sample output, and additional commentary for clarification.

Many commands (programs) have short (-l) and long style (--listing) options. Either form is acceptable, although we recommend learning the short form for interactive use, and the long style in scripts (for purposes of self-documentation). Options can be concatenated, so "-s -i -v" is also typed on a command line as "-siv." The Vertical Bar '|' (known also by its Unix name, pipe) character is used in the option listings as it is in manpages, as a stand-in for the word "or." Some programs have many pages of options documented; for these we only highlight the more common ones. Detailed online information for most commands is available through the man and info resources, both discussed in the following section.

Lastly, we understand that some of the explanations and descriptions contained in the pages that follow occasionally raise as many questions as they answer. The most common question is about regular expressions (or regex's) -- what they are and how to use them. Regex patterns search for and select text and are used extensively in many Linux commands. The "Using Filter Commands" section of this chapter discusses the basic details of regular expressions.

In the each of the various commands, we provide a working context that lets you understand the current topic; you can explore the other resources to learn more about related concepts at some later time.

HELP! AAAARRRRGGGHHHH! This is the sound of a new Linux user who has typed rm -rf / home/bilbrey/temp (don't try this at home), while logged in as root. After reinstalling your Linux system, the first thing to do is search out resources about that "event" (putting it politely), in order to prevent its recurrence. In this section, we survey the various resources available to convey help, information, and digital sustenance to the user.

Note

As used previously, the rm command erases the entire contents of the "/" (or root) file system. That accidental space between the first forward slash and home/bilbrey/temp is the culprit. Without the space, it merely deletes the /home/bilbrey/temp directory and all it's contents.

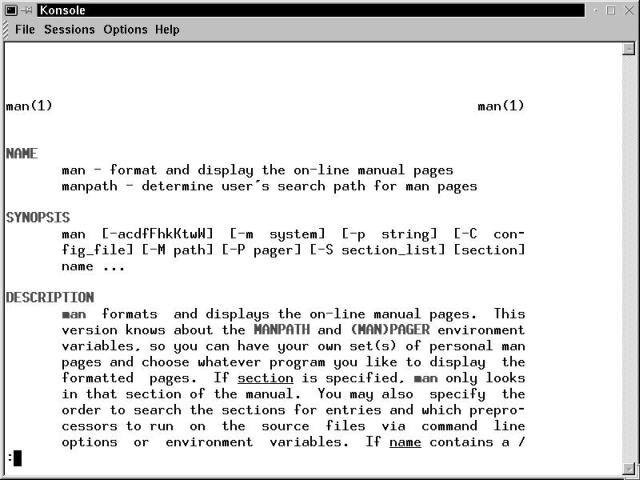

man commandThe man command - used to format and display online manual pages - is the single most useful command for the new and intermediate Linux user. Manpages (the program documentation files accessed by man) are the frontline help system in any Linux installation. Not only are manpages easily accessible, but the burden on system resources is low, with less than 15 MB of disk space required for all the manpages that are packaged with OpenLinux. Many (but not all) system commands have associated manual pages.

Sidebar :: The Case of the Missing Manpages

Follow these directions to add manpage capability and files to your system if they got left out at installation time. Insert the installation CD-ROM, and enter:

The su program (short for Super User), entered without argument, prompts a user for the root password. Upon authentication, root access is granted until exit is typed, or the virtual terminal is killed. The mount command tells the operating system that it can look for and find valid data on the CD-ROM, and the rpm command (Red Hat Package Management), typed as shown, installs the manpage program and data.

Note that the above loads ALL of the manual pages, in every available language. Select from the man-pages-*rpm files to load only the ones that you want, if desired.

man - Format and display the online manual pages

Usage : man [options] [n] name

|

|

-a |

Display consecutively all of the manpages that match name |

-C |

Specify a different configuration file than /etc/man.conf |

-p |

Use a different paging utility to display manpages |

-c |

Force a reformat of the manual page (used after updates) |

n |

Select a section from which to get manpage |

name |

Topic of manpage, must match exactly |

Example:

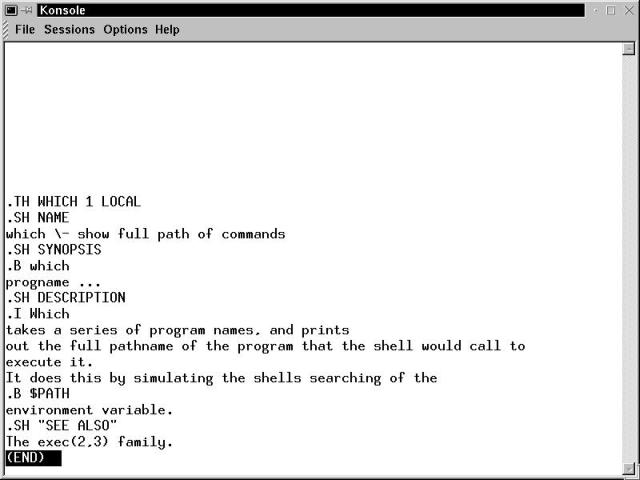

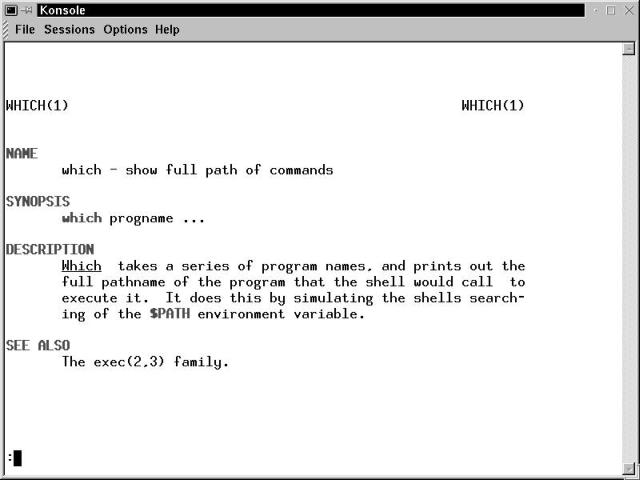

[bilbrey@bobo bilbrey] man man

Figure 17-1 shows the initial terminal window for the example given previously.

Figure 17-1

The man manual page displayed in a Konsole terminal window

Data from manual pages is collected and organized in a file called the whatis database. This database is generated after the installation of your Linux system, since installation options cannot be determined in advance with any accuracy. Tools such as apropos and whatis are used in conjunction with the whatis database to assist the user in locating the correct manual page.

Warning

The GNU/Linux operating system is a highly stable platform, designed and tuned for weeks and months of continuous uptime. Many tools of the system are partially dependent upon the system running fairly continuously. This includes the automatic process that builds the whatis database. If you get an error back typingman man, then look to themakewhatisprogram, described in Chapter 19, to build your manual page database.

Additionally, there are several different sections to the manpages, as listed here. Each section contains the manual pages pertinent to the section description given. Most user commands are documented in Section 1, whereas many of the programs discussed in Chapter 19 are tucked away in Section 8.

Section 1 Shell commands and executable programs

Section 2 Kernel functions (system calls)

Section 3 Programming routines that are not system calls

Section 4 System files and interfaces (usually /dev file info)

Section 5 Configuration file layouts and explanations

Section 6 Manpages for games, related information (joysticks, and so on)

Section 7 Conventions and formats for assorted macro packages

Section 8 System commands (usually for root user only)

Section 9 Documentation for experimental kernel routines

Manual pages for related functions or programs, from different sections, can have the same name (crontab exists in several sections, for example). Select the correct section to read from by specifying the section number just before the page name, as in:

man 5 crontab

There are two crontab pages, in Sections 1 and 5. Typing man crontab only displays the Section 1 manual page. To see multiple manpages, from all sections, type man -a pagename. The -a option displays the available pages sequentially - quit from one page to see the next. This option is useful when you are not sure if there are several pages on hand, nor which one might hold the data you are seeking.

The actual manual pages are stored in a compressed (gzip) format. For instance, the Section 5 crontab manual page used in the previous example is displayed from the following file: /usr/man/man5/crontab.5.gz. When a manual page is displayed, it is uncompressed, then processed for display to the terminal. The uncompressed file is stored in a temporary cache directory for possible future use.

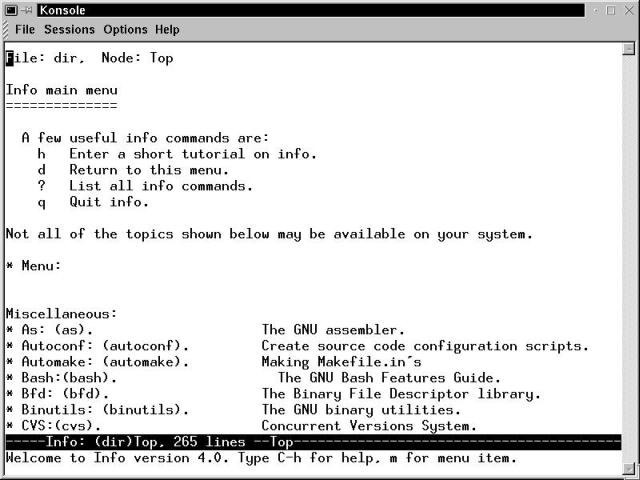

info commandThe other major on-system resource for information is the Texinfo Documentation System, a text-based, hyperlinked help system. The info program is used to access this resource. The program displays information (and links between data) in a manner similar to the hypertext used in Web browsers. The most common question asked about info is, "Why a different hypertext system? Why not use HTML?" That is a good question, since the info interface is a little cryptic at first glance. The simple answer is that info was around long before HTML. The Texinfo interface is based upon the keymap employed by GNU emacs. Most of the info data is also available on the OpenLinux system via the browser interface that KDE provides. However, while working in console mode, you will be glad to know how to navigate info files in order to locate a crucial configuration file that prevents the X Windows server from starting. Starting info from the command line yields the display shown in Figure 17-2.

Figure 17-2

Terminal window with info splash screen visible

To really learn info, start the program and run the tutorial by pressing 'h'. Spend an hour or more finding new and interesting things. When that process is done, the interface has assimilated you. However, if you lack the time to explore, use the following commands inside info to get specific information and remember that typing a '?' displays a list of available commands.

The overall structure of info documentation is like that of an inverted tree. There are many root nodes at the top level - the page that is displayed when info starts. Descend the tree, drilling down into a subject by positioning the cursor on a link, shown as text lying between an asterisk and a colon (in bold, below):

* mkdir: (fileutils)mkdir invocation. Create directories.

Use the Arrow keys to position the cursor anywhere in the text mkdir and press Enter to go to the page requested. Often this leads to another menu of selections, depending on the complexity of the information. The search function permits quick isolation of the correct link or data on the current page. Another way navigation method is to skip from link to link using the TAB key.

Type forward slash (/) to start a search. While there are pattern-matching (regular expression) features in the search function, plain string matching works as well and usually directs the user correctly to the desired information. To survey information about directories, type /director, which will match on the words directory and directories. The first search finishes on the remsync line. Repeat the prior search by simply pressing / followed by Enter. On the third repeat, the cursor is placed on the mkdir line shown previously.

Page Up and Page Down permit vertical navigation on a page. Press u to move back up the tree (from the current document to the parent or calling document). Press q to leave info. If a help window (or other extraneous window) is open, type Ctrl+x 0 (the number zero) to close the window. From the command line, go directly to a page by using a correct keyword: info mkdir

info - Access the Texinfo help system files

Usage : info keyword

|

|

keyword |

Program names provide index key into info documentation |

Interestingly, when there is no Texinfo page on a topic (say, Perl), then typing info perl results in the perl manpage being displayed in an info-formatted screen. This scores points in info's favor, since it non-prejudicially displays the data from the "legacy" manual page help system. Note that the inverse does not hold true - there is no manpage for info.

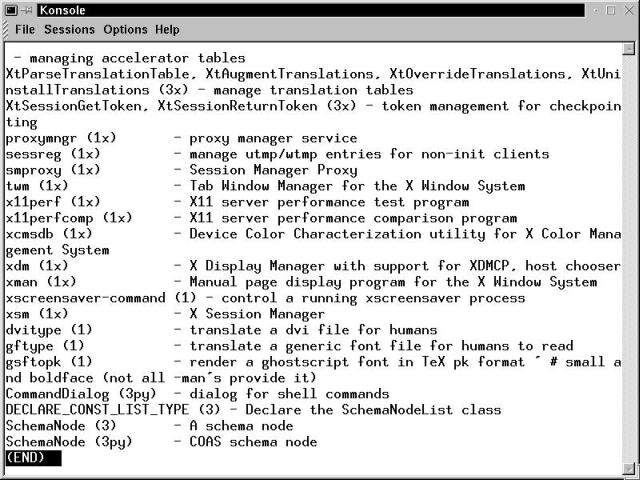

apropos commandWhat are your options when the correct command isn't known? apropos often provides the answer. (Why only most of the time? Well, it depends on the words you choose to search for, for one thing...) The apropos program queries the whatis database, based upon the argument string or keyword provided, and displays a scrollable list of pertinent manpages. Note that specifying a string is not synonymous with specifying a word. To search for specific words in the whatis database look to the whatis command, coming up next.

apropos - Search the whatis database for strings

Usage : apropos keyword

|

|

keyword |

Can be any string (including spaces, if in quotes) |

Figure 17-3 shows the tail of the 344 lines returned by typing apropos man, demonstrating that some searches are a bit ... too broad. The less program (detailed in the section "Working with File, Directory, Permission, and Link Commands") formats the output, allowing the user to search and scroll through the listing. The commands to work with the output generated from the apropos command are few and simple. Page Up and Page Down function to scroll a multi-page apropos listing, pressing slash within a listing initiates a simple text search, and pressing q quits out of the listing.

Figure 17-3

Part of the output from running apropos man.

The problem with the listing in Figure 17-3 is that there is too much information. Unusually for Linux, apropos is not case sensitive. So every entry from the whatis database that includes the string "man" is outputted. In cases like this, we turn to the whatis command for searching on whole words. With a little practice, apropos and it's companion command whatis are extraordinarily useful tools.

whatis commandWhen apropos returns too many results (say, more than two screens full), try narrowing the field by searching on whole words using the whatis command.

whatis - Search the command name field of the whatis database for whole words

Usage : whatis keyword

|

|

keyword |

keyword is the name of a command or program |

Examples:

[bilbrey@bobo bilbrey] whatis man

. . .

man (1) - format and display the on-line manual pages

man (7) - macros to format man pages

man.conf (5) - configuration data for man

[bilbrey@bobo bilbrey] whatis foolish

foolish: nothing appropriate

In the first example above, in a cleared screen, the three results shown are displayed. This is a more useful selection set than the 344 lines of output resulting from apropos man. whatis is most effective for determining which section's manpage to load when a program or function name is already known. When a keyword that is not a program, command, or in the description thereof is used, a result similar to the second command example shown previously is displayed.

locate commandWhen you need to find where a specific file is located - which can be a challenge in a large and complicated directory structure - the locate command can simplify the search.

Useful Information

When you need to locate the files for a newly installed program, package, or tar (tape archive) file, theupdatedbprogram needs to be executed to add the most recent inhabitants to the file name database. To do this,suto root, then executeupdatedb & ; exit. This runs the update in the background, continuing while you exit from superuser status. When the disk stops thrashing (usually several minutes), then the database has been refreshed. This results in a non-discriminating database that includes temporary files and everything else. The version that runs as an automated job every night can be executed by root as well: type/etc/cron.d/lib/update-locatedbto build a smaller database that omits several sub-directories. Examine that script file for details.

locate - List files that match a pattern in the locate database

Usage : locate [-d path | --database=path][--version][--help] pattern

|

|

--database |

Select a different file database than standard / |

--version |

Print locate version number and exit |

--help |

Show a list of valid options, short help and exit |

pattern |

String (or quoted string with metacharacters) to search for |

locate - List files that match a pattern in the locate database

Usage: locate [-d path | --database=path][--version][--help] pattern

--version Print locate version number and exit

--help Show a list of valid options, short help and exit

pattern String (or quoted string with metacharacters) to search for

Example:

[bilbrey@bobo bilbrey] locate XF86Config

/etc/XF86Config

/etc/XF86Config.org

/etc/XF86Config.vm

/opt/kde/shar/apps/lizard/templates/XF86Config

/opt/kde/shar/apps/lizard/templates/XF86Config.test

/opt/kde/shar/apps/lizard/templates/XF86Config.VGA16

/opt/kde/shar/apps/lizard/XF86Config

/usr/X11R6/lib/X11/XF86Config.eg

/usr/X11R6/man/man5/XF86Config.5x.gz

In that example, the current XF86Config file is located in /etc/, along with two backup copies created as system modifications were made. The versions that live in the lizard directory are OpenLinux specific, related to the Lizard installer. The X11R6 results show a generic (rather than distribution-specific) example file, and the XF86Config manpage (which is stored in .gz compressed format).

which command It is possible that there are multiple copies of a program in a Linux installation. While one version of a program is loaded by default when the system is installed, the administrator or a user can add another version or another program of the same name (but of a different revision or function). Which version is going to be executed? The aptly named which searches the current path, looking for the first file that matches the program name given. The output shows you the location of command that is executed when you type the program name.

which - Show the full path of commands

Usage : which program-name

|

|

program-name |

String (or quoted string with metacharacters) to search for |

Examples:

[bilbrey@bobo bilbrey] which man

/usr/bin/man

[bilbrey@bobo bilbrey] which kde

/opt/kde/bin/kde

whereis commandThe whereis program is another tool for locating resources on the system. Binaries (executable programs), sources (program code) and documentation (manpages) can all be located with just one whereis command. There is a range of useful options to modify and enhance whereis operation, as well. Lastly, the caveat at the bottom of the whereis manpage is pertinent:

"whereis has a hard-coded path, so may not always find what you're looking for."

The manpage states that whereis searches in a list of usual Linux places. Taken together, that means the search directories are written into the source code, rather than taken from a configuration file. Each Linux distribution can select a different set of "usual places" when compiling the utility. You can even download, modify, and compile the sources yourself to meet specific needs.

whereis - Locate the binary, source, and manual page files for a command

Usage : whereis [options [directories -f]] filename

|

|

-b |

Search for binaries only |

-m |

Search for manual pages only |

-s |

Search for source files only |

-u |

Search for unusual entries (missing one of the above) |

-B |

Change or limit the binary search directories |

-M |

Change or limit the manpage search directories |

-S |

Change or limit the source search directories |

-f |

Terminate list of -B, -M, -S options |

Examples:

[bilbrey@bobo bilbrey] whereis

whereis [-sbmu] [-SBM dir ... -f] name...

[bilbrey@bobo bilbrey] whereis printf

printf: /usr/bin/printf /usr/include/printf.h /usr/man/man1/

printf1.1.gz /usr/man/man3/printf.3.gz

While there is no explicit help option, typing whereis without options as shown in the first example displays a very short form of assistance. The second command example demonstrates that an executable binary called printf exists in the /usr/bin directory. In addition, the output shows the program's source file (printf.h), and two manpages (printf1.1.gz and printf.3.gz) associated with the printf name. whereis doesn't do a user much good if the command name isn't known to begin with, but is remarkably useful within its scope.

pwd commandThe pwd program is used to answer one of the more common questions encountered while using the command line: "Where am I?" Even seasoned users sometimes become disoriented in the complex structure of directories in a Linux installation. pwd returns the fully resolved path of the current directory in the calling terminal.

Note

pwd is one of those command that is both a GNU program (found at /bin/pwd), and a Bash built-in, as discussed in Chapter 14. Unless you fully specify the path to the GNU executable, you'll be running the Bash version. Fortunately, the results are the same, since we're describing the GNU edition, here.While that explanation may seem overly specific, recall that several terminal windows are often open simultaneously, and each can return a distinct path in response to the pwd command. A fully resolved path is a true path, rather than one that contains symbolic links (see the section "Links", later in this chapter).

pwd - Print name of current/working directory

Usage : pwd [options]

|

|

--version |

Print program version number and exit |

--help |

Show list of valid options, short help, and exit |

Examples:

[bilbrey@bobo bilbrey] pwd

/home/bilbrey

date commandThe date command functions in its simplest invocation to return the current system date and time. There are also a variety of options and formats for printing and setting the system date, depending on a variety of circumstances and requirements.

Unlike other operating systems, Linux is content to have a system (hardware) clock set to Universal Time (UT, formerly GMT). Local date and time information is calculated in interaction with the symbolic link, /etc/localtime, which is a pointer to one of the timezone data files located in /usr/share/zoneinfo. Setting time, date, and timezone is a tricky topic that we handily put off until Chapter 19.

date - Print or set the system date and time

Usage : date [options] ... [+format]

|

|

-d | --date=date |

Date may be given numerically or as a quoted string |

-f | --file=file |

Display like -date for each line in filename |

-r | --reference=file |

Display date and time file was last modified |

-R | --rfc-822 |

Display RFC-822 compliant date string |

-s | --set=string |

Set time as described by string |

-u | --utc |

Display (or set) Universal Time |

--help |

Show list of valid options, short help and exit |

--version |

Print date program version number and exit |

+format |

Display time using the given format |

Examples:

[bilbrey@bobo bilbrey] date

Sun Apr 9 16:38:31 PDT 2000

[bilbrey@bobo bilbrey] date -d '2 days ago'

Fri Apr 7 16:39:57 PDT 2000

[bilbrey@bobo bilbrey] date -d 'Jan 23' +%A

Sunday

[bilbrey@bobo bilbrey] date -d 'Dec 25'

Mon Dec 25 00:00:00 PST 2000

Note in the final example, that with a specified (rather than relative) date, the time information is unset. Two other things can be observed from the final sample. First, Christmas was on a Monday, in the year 2000. Secondly, the time-keeping facilities in Linux understand and track Daylight Savings Time quite well. The characters that define a format string (along with more examples) are listed by typing date --help.

logname commandThe logname command prints the login name of the current user. It's useful in some script applications, or from the command line if you've forgotten which username you're logged in under.

logname - Print the login name of the current user

Usage : logname [options]

|

|

--version |

Print locate version number and exit |

--help |

Show list of valid options, short help, and exit |

Example:

[bilbrey@bobo bilbrey] logname

bilbrey

users commandThe users command queries the /var/run/utmp file to return a listing of the usernames for existing login sessions. If a user is logged in more than once (say by having multiple consoles or terminals running), then that individual's username will appear that many times in the users output.

users - Print the login names of the current users on system

Usage : users [options]

|

|

--version |

Print program version number and exit |

--help |

Show list of valid options, short help, and exit |

Example:

[bilbrey@bobo bilbrey] users

bilbrey bilbrey bilbrey syroid bilbrey

who commandThe who command, executed with no non-option arguments, displays the login name, terminal device data, login time, and the hostname or X display (if applicable).

More Info

Most Linux commands try to interpret arguments that are not options as filenames, so a non-option argument is usually interpreted to be an error. The only non-option argument that is allowed specifies a filename pointer for the data (usually wtmp) to use in place of the utmp file. A two non-option argument directs who to output the information for the current user/device, preceded by the full hostname. This allows the common usage version of the program:who am i.

who - Show who is logged on

Usage : who [options] [filename] [am i]

|

|

--version |

Print program version number and exit |

--help |

Show list of valid options, short help and exit |

Examples:

[bilbrey@bobo bilbrey] who

syroid tty3 Apr 16 15:35

bilbrey :0 Apr 16 14:51 (console)

bilbrey pts/0 Apr 16 14:51

bilbrey pts/1 Apr 16 15:04

[bilbrey@bobo bilbrey] who am i

bobo.orbdesigns.com!bilbrey pts/0 Apr 16 14:51

Warning

The on-system documentation for the who command, found by typinginfo who, is outdated on the 2.4 version of OpenLinux. The correct locations for the files mentioned in the "More Info" box above are /var/run/utmp and /var/log/wtmp. There are several gotchas like this in the file location schemes, as the Linux Standards Base guidelines are being followed in advance of the documentation. When other routes fail, use thelocatecommand to help determine actual file location.

w commandEven for a system like GNU/Linux, which is sometimes disparaged for having short, cryptic commands, w is a bit over the top. Typing w displays a superset of the information rendered by the who program.

w - Show who is logged on

Usage : w [options] [user]

|

|

-h |

Suppress printing of the listing header row |

-u |

Ignore user name while calculating JCPU and PCPU |

-s |

Short format: Skip login time, JCPU, and PCPU |

-f |

Toggle FROM field printing (suppress for OpenLinux) |

-V |

Show version information only |

user |

Display rows pertaining only to specified user |

The JCPU column lists total processor time for all currently running foreground and background processes. PCPU lists processor time for the current process (listed in the WHAT column) only.

Examples:

[bilbrey@bobo bilbrey] w

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

syroid tty3 - 3:35pm 1:07m 0.31s 0.15s top

bilbrey pts/0 - 2:51pm 0:23m 1.02s 0.07s man w

bilbrey pts/1 - 5:39pm 0.00s 0.28s 0.08s w

bilbrey pts/2 grendel 5:23pm 0:17m 0.31s 0.15s -bash

Several points of interest come out of the previous example. The third output line shows the process and login for the terminal that generates the output - you can see the w in the WHAT column. The fourth line reflects a remote login from another machine: grendel in the FROM column. As with previous examples, user syroid is logged in at a virtual console, via device tty3. All the other logins are virtual terminal sessions, denoted by the pts/n in the TTY column.

id commandThe id command is a tool for determining the user ID (UID) and group ID (GID) information about a user, as well as any supplementary groups that the user may belong to. Without arguments, id prints information about the current user.

id - Print real and effective UID and GID

Usage : id [options] [username]

|

|

-g | --group |

Display only the GID |

-G | --groups |

Display only supplementary groups |

-n |

Display name rather than number for -ugG options |

-r | --real |

Display user's real ID for -ugG options |

-u | --user |

Display only the UID |

--help |

Show short form help and exit |

--version |

Show version information and exit |

username |

|

Examples:

[bilbrey@bobo bilbrey]$ id uid=500(bilbrey) gid=100(users) groups=100(users) [bilbrey@bobo bilbrey]$ id syroid uid=501(syroid) gid=100(users)

echo commandThe echo command is commonly used in script files to display status or updates to standard output or standard error, as we shall see in Chapter 18. Additionally, echo is a handy diagnostic tool that permits easy examination of the shell variables affecting the current user. Again, we're describing the GNU utility, although the Bash built-in echo is actually what's executed by default, to the same effect.

echo - display a line of text

Usage : echo [options] string

|

|

-e |

Enable expansion of backslash quoted characters |

-n |

Suppress trailing newline character |

--help |

Show short form help and exit |

--version |

Show version information and exit |

string |

Text string to be sent to standard output |

Examples:

[bilbrey@bobo bilbrey]$ echo -n 'This is IT!!!'

This is IT!!![bilbrey@bobo bilbrey]$

[bilbrey@bobo bilbrey]$ echo $HISTFILE

/home/bilbrey/.bash_history

[bilbrey@bobo bilbrey]$ echo '$TERM'

$TERM

[bilbrey@bobo bilbrey]$ echo "My terminal setting is $TERM"

My terminal setting is xterm

In the first example, the -n option prevents a newline from being echoed to the display, which puts the next command prompt on the same line as the output from the echo command. The second example demonstrates using the echo program to examine the contents of a single shell variable. From Chapter 14, the leading $ on the variable tells the bash shell to expand the variable to its value, if it exists. In the last two examples, using the single (aka strong) quotes around a shell variable prevents the expansion; whereas the double quotes permits string echoing with variable expansion.

printenv commandThe printenv program displays environment variables. Additionally, the listing can be limited by specifying which variables to print. The documentation for printenv claims that when run without argument, printenv displays most environment variables.

More Info

set, a bash built-in command listed in Chapter 15, also displays environment variables, and lists Bash specific variables about whichprintenvknows nothing.

printenv - Print all or part of environment

Usage : printenv [options] [variable...]

|

|

--help |

Show short form help and exit |

--version |

Show version information and exit |

variable |

Text string to be sent to standard output |

Example:

[bilbrey@bobo bilbrey]$ printenv PATH

/bin:/usr/bin:/usr/local/bin/usr/X11R6/bin:/opt/bin:/opt/teTex/

bin:/opt/kde/bin:/usr/java/bin

Filesystems are composed of files. While that may seem a tad obvious, the filesystem is the foundation upon which an OS is based. Surely one doesn't need files to do lots of interesting things? True, but if you want a record of those interesting things, then the results are going to be written somewhere . . . in a file.

Physical disks are quickly rotating metal platters covered with a magnetic film. Binary information is written to the magnetic media. How that binary data is organized is the job of a filesystem. For most current GNU/Linux systems, that filesystem is e2fs (Extended 2 File System).

Under e2fs, every partition is formatted into groups, each of which contains key information that can be used to recover the vast majority of the disk's information should something drastic happen to the drive. Groups are divided into blocks, normally of 1,024 bytes in size.

e2fs is an inode-based filesystem. This means that every file on the disk is associated with exactly one inode. In the same manner that a URL such as calderasystems.com is translated by nameservers on the Internet into the IP address for the Caldera Systems Web server (207.179.18.130), the inode for the file /home/bilbrey/Chapter23 on this system, at this time, is 104928. Both names are equally valid, but text works better for us humans, and the numeric form works better for the computer. An inode stores pertinent file information such as filename, creation and modification times, file type, permissions and owner, and the location of the data on the disk. When a partition is formatted, the number of groups, blocks, and inodes are set. These cannot be changed without reformatting the partition.

Almost all of the commands that appear in this section work with files of one type or another (yes, directories are just special files). We start with ways to list files and get information about them.

ls commandThe ls command is one of the core commands for every linux user, and is used to list the files in a directory. The program also has a rather extensive list of options for selecting, sorting, and formatting the output data. There are many more options than the ones we find most useful - see man ls for more details.

ls - List directory contents

Usage : ls [options...] [filename...]

|

|

-a | --all |

Show entries starting with . ("hidden" files) |

-c |

Sort by change time (by creation time with -l) |

--color[=WHEN] |

Specify use of color in listings: never, always, or auto |

-d | --directory |

List directory entries rather than contents |

-h | --human-readable |

Format file sized in human terms 100K, 2.5MB, and so on. |

-i | --inode |

List the filesystem inode number associated with filename |

-l |

Display using long listing format (detailed) |

-r | --reverse |

Invert order of any sorted listing |

--sort=WORD |

Sort entries based upon extension, none, size, version, status, time, atime, access, or use |

-U |

Do not sort output, display in directory order |

--help |

Display help and exit |

--version |

Show version information and exit |

filename |

May be any filename, directory name, or wildcard pattern |

Examples:

[bilbrey@bobo bilbrey]$ ls

Chapter01 Chapter03 Chapter05 Chapter07 Chapter09 Chapter11

Chapter02 Chapter04 Chapter06 Chapter08 Chapter10 Chapter12

[bilbrey@bobo bilbrey]$ ls -al .bash*

-rw-r-r-- 1 bilbrey users 1408 Apr 17 21:09 .bash_history

-rw-r-r-- 1 bilbrey users 49 Nov 25 1997 .bash_logout

-rw-r-r-- 1 bilbrey users 577 Jul 8 1999 .bashrc

[bilbrey@bobo bilbrey]$ ls -il .bash_history

104898 .bash_history

rm commandWhen you are done with a file, delete it. Easy, right? rm (for remove) will delete files for which the calling user has write permissions. Also, there are options to provide interactive feedback during rm processing (handy backstop for the new user).

rm - Remove files or directories

Usage : rm [options...] filename...

|

|

-f | --force |

Ignore non-existent filenames, never prompt |

-i | --interactive |

Prompt before each removal |

-r | --recursive |

Delete directory contents recursively |

-v | --verbose |

Display each action as done |

--help |

Display help and exit |

--version |

Show version information and exit |

filename |

May be any filename, directory name, or wildcard pattern |

WARNING

The last option given rules the day. If an option string ends inf, then the action will be forced, with a priori(interactive) option being overridden. We have been caught by this and so will you. One of the most dangerous commands the root user can run:rm -rf /, which deletes the entire file system. Don't do that unless you're planning on reinstalling, and all of your data is backed up, or you are clinically ... well, anyway, just don't do that, OK?

Examples:

[bilbrey@bobo bilbrey]$ rm -i Chapter?1

rm: remove 'Chapter01'? y

rm: remove 'Chapter11'? y

[bilbrey@bobo bilbrey]$ rm -rfv total

removing all entries of directory total

removing total/sum

removing total/dividend

removing the directory itself: total

The first example uses the ? wildcard to select all files that begin with "Chapter", have any character following that, prior to a closing "1". The -i option enables the per-file prompting seen in the example. The second example demonstrates recursion, forcing and verbose output.

You can exercise caution by creating an alias for rm - putting the following line in your .bash_profile: alias rm='rm -i'. Then, from every login session thereafter, the rm command will be run in interactive mode, which acts as a safety checkpoint, rather like training wheels until you get tired of them. Then you'll disable that one day, and remove a directory tree that you didn't intend... You are keeping and testing your backups, right?

cp commandTo copy files from one place to another, use the cp command. The function is reasonably similar to that of the copy command that exists under DOS, with one caveat: There must always be a specified destination, even if that destination is as simple as . (as in the period character), signifying "right here."

cp - copy files and directories

Usage : cp [options...] source [...] destination

|

|

--a | --archive |

Preserve permissions and structure as makes sense |

-b | --backup |

Make backup copies of files about to be overwritten |

-f | --force |

Forced overwrite at destination, no prompt |

-i | --interactive |

Prompt before overwrite, inverse of --force |

-p | --preserve |

Preserve permissions rigidly, including ownership |

-R | --recursive |

Use recursion to copy directory structures |

-u | --update |

Do not overwrite if destination is more recent |

-v | --verbose |

Display name of each file as copy executes |

--help |

Display help and exit |

--version |

Show version information and exit |

source |

May be any filename, directory name, or wildcard pattern |

destination |

Filename for single file source, directory otherwise. |

Example:

[bilbrey@bobo bilbrey]$ cp -bi Chapter* ~/book

cp: overwrite 'book/Chapter01'? y

cp: overwrite 'book/Chapter02'? y

* * *

[bilbrey@bobo bilbrey]$ ls book/Chapter01*

book/Chapter01 book/Chapter01~

The example creates a backup copy of the original files (-b) and prompts prior to overwriting the original (via -i). The destination directory ~/book, in this case is the bash shell short hand for /home/bilbrey/book - the leading tilde, slash indicates something in the current user's home directory. On the other hand, the trailing tilde in the output of the ls command indicates that Chapter01~ is the backup copy created when the cp command was executed.

dd commandTo copy bits between devices and files, dd is a very handy utility. Most GNU/Linux distributions have associated utilities for creating boot, root, and rescue floppies. The utility allows a user (as root) to write floppy disks directly from disk image files. Additionally, dd can be used to create bit-wise copies of any information, useful when dealing with file types and file systems that are not native to Linux.

dd - Convert and copy a file

Usage : dd [option]...

|

|

bs=bytes |

Sets input (ibs) and output (obs) byte counts |

cbs=bytes |

Convert BYTES at a time |

conv=keywords |

Conversion from, to (keyword, keyword) list |

ibs=bytes |

Input block size in bytes |

if=filename |

Specify an input filename (instead of STDIN) |

obs=bytes |

Output block size in bytes |

of=filename |

Specify an output filename (instead of STDOUT) |

seek=blocks |

Block count to skip prior to writing output |

skip=blocks |

Block count to skip prior to reading input |

--help |

Display help and exit |

--version |

Show version information and exit |

The keywords are ascii, ebcdic, ibm, block, unblock, lcase, notrunc, ucase, swab, noerror, and sync (these are described in detail on the dd manual page). An example of the form given previously, creating a boot floppy, is demonstrated in the command line that follows (permissions apply - the root user would have to issue the following command to write to the floppy device directly):

[bilbrey@bobo bilbrey]$ dd if=/root/boot.img of=/dev/fd0 bs=512 sync

This command gets data 512 bytes at a time from the /root/boot.img file, and writes it to the "A" drive (drive 0 on the floppy chain). The sync option pads every write out to 512 bytes with nulls to ensure full sector writes. Usually this is only useful on the final sector write, to ensure that pre-existing data is overwritten properly.

mv commandThe mv program moves files and directories. Additionally, this is the command used to rename objects, as a specialized form of movement.

Numbered Backups

Add the following line to ~/.bash_profile:export VERSION_CONTROL=numbered. When this environment variable is set, numbered backups are created using themvandcpcommands with the-boption. While this feature has the ability to quickly fill drive partitions, you never lose data if you make backups with version control.

mv - Move (rename) files and directories

Usage : mv [options...] source [...] destination

|

|

-b | --backup |

Make backup copies of files about to be overwritten |

-f | --force |

Forced overwrite at destination, no prompt |

-i | --interactive |

Prompt before overwrite, inverse of --force |

-u | --update |

Do not overwrite if destination is more recent |

-v | --verbose |

Display name of each file as copy executes |

--help |

Display help and exit |

--version |

Show version information and exit |

source |

May be any filename, directory name, or wildcard pattern |

destination |

Filename for single file source, directory otherwise |

Example:

[bilbrey@bobo bilbrey]$ mv -bi Intro ~/book

mv: overwrite 'book/Intro'? y

[bilbrey@bobo bilbrey]$ ls ~/book/Intro*

book/Intro book/Intro.~1~ book/Intro.~2~

As with the copy operation noted previously, ~/book represents a directory called book which is in the /home/bilbrey directory. Once the move operation is complete, with the backup (-b) and interactive prompt (-i) options enabled, the ls command displays the results of the move, showing the moved file and the two backup copies that exist.

The move operation has a number of distinct behaviors depending upon the specification of the source and destination. Overwriting is enabled by default in most GNU/Linux installations -- use the -b or -i options to protect against unanticipated data disappearance.

file --> filename Rename file to filename, overwrite if filename exists

file --> dirname Move file into directory, overwrite dirname/file if existing

dir --> dirname Move dir into dirname as a sub-directory, overwrite dirname/dir if it exists.

touch commandFrequently called upon by programmers and power users, touch updates a file's access and modification time to the current system time, by default creating the file if it doesn't exist. This is useful for a variety of reasons - for instance, there are other system tools such as make (a programmer's tool) that rely on file modification times to provide guidance on program operations. Another application family that uses timestamps are backups. touch a file to ensure it gets included in the current incremental backup, for example.

touch - Change file timestamps

Usage : touch [options...] file...

|

|

-a |

Change file access time only |

-c |

Do not create file if non-existent |

-d | --date=STRING |

Parse and use STRING instead of current time |

-m |

Change file modification time only |

--help |

Display help and exit |

--version |

Show version information and exit |

file |

May be any filename, directory name, or wildcard pattern |

Example:

[bilbrey@bobo bilbrey]$ ls Chapter3*

Chapter30.txt

[bilbrey@bobo bilbrey]$ touch Chapter31.txt

[bilbrey@bobo bilbrey]$ ls Chapter3*

Chapter30.txt Chapter31.txt

The example shows a before and after listing. The touch command created the Chapter31.txt file. Another common use of the touch utility is to update the date/time stamp on a source code file, to ensure that its contents will be included when the code is compiled.

head commandThe head program displays the first part (or head) of a file or files. head is often used for a quick glance, to determine whether or not to edit the file. By default, the first ten lines of each file argument are listed, including a filename header when multiple file arguments are given.

head - Output the first part of files

Usage : head [options...] file...

|

|

-c | --bytes=N |

Print number of bytes equal to N |

-n | --lines=N |

Print number of lines equal to N |

-q | --quiet |

Suppress printing header information |

-v | --verbose |

Always print headers, even for single file arguments |

--help |

Display help and exit |

--version |

Show version information and exit |

file |

May be any filename or wildcard pattern |

Example:

[bilbrey@bobo bilbrey]$ head -vn4 Chapter17

==> Chapter17 <==

Now we embark on a trek through some most common user commands.

There are over 1800 commands available to the user of an OpenLinux

System with all packages installed. This chapter addresses a small

fraction of that number. However, we will show you how to get started,

tail commandThis command is used to view part of one or more files; the bottom part. Like head, ten lines is the default chunk displayed. However, tail has an additional feature that head doesn't: the follow option. Follow directs tail to keep monitoring the file, displaying new lines as they are appended to the file. This behavior is especially useful when monitoring log files, a practice discussed in Chapter 21.

tail - Output the last part of files

Usage : tail [options...] file...

|

|

-c | --bytes=N |

Print number of bytes equal to N |

-f | --follow |

Output appended data as the file grows |

-n | --lines=N |

Print number of lines equal to N |

-q | --quiet |

Suppress printing header information |

-v | --verbose |

Always print headers, even for single file arguments |

--help |

Display help and exit |

--version |

Show version information and exit |

file |

May be any filename or wildcard pattern |

Example:

[bilbrey@bobo bilbrey]$ tail -vn4 Chapter14

==> Chapter14 <==

all the power of command line processing available. There

are advantages to working with multiple command line views,

as we shall see in Chapter 18. In this chapter, we examined

the fundamentals of consoles, terminals, shells and bash.

The more command

more is a simple paging filter. It displays the content of its arguments to the terminal or console, one page at a time. Additionally, there are a number of options to control the operation of more while it is displaying data. Finally, more automatically terminates when the end of the file is displayed (the last file, if more than one is specified on the command line).

Note

A filter is a program or tool that takes input, modifies it in some way, and sends the results to standard output (usually a console or terminal window). Filters may be used on a stand-alone basis, or string together via redirection to create complex data processing paths.

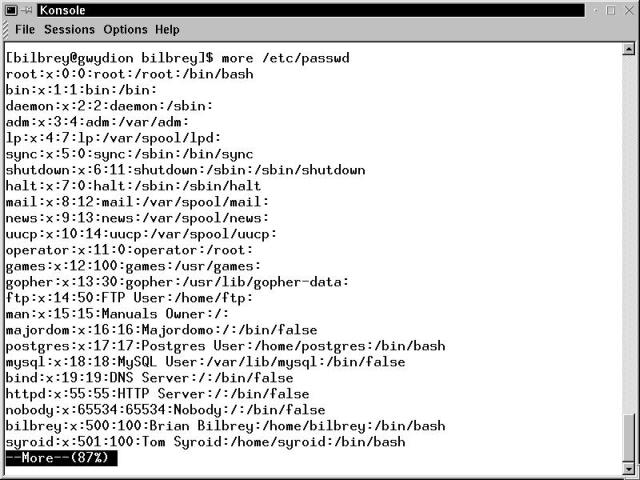

Figure 17-4 shows a terminal window displaying the results of the command more /etc/passwd. The status line at the bottom of the display shows the percentage of the file that has been viewed.

Figure 17-4

Terminal window with output from the more filter

more - File perusal filter for crt viewing

Usage : more [options...] [+/ pattern] [+linenum] file...

|

|

-N |

Specify the number of lines (screen size) |

-d |

Helpful mode: provide useful user prompts in operation |

-l |

Suppress special treatment of the form feed character |

-f |

Count logical, rather than screen lines |

-s |

Squeeze multiple blank lines into one |

-u |

Suppress underlining |

pattern |

Either a simple search string or regular expression |

linenum |

Integer target line number to display |

file |

May be any filename or wildcard pattern |

Table 17-1 lists the commands that are operative while the more filter is running.

Table 17-1

Interactive Commands for more

| Command | Description | |

h | ? |

Display command summary (these commands) | |

Space |

Display next K lines, default to screen size | |

K z |

Display next K lines, default to screen size, K becomes new default | |

K Enter |

Display next K lines, default to 1, K is new default | |

K d | K Ctrl + D |

Scroll K lines, default is 11 lines, K is new default | |

q | Q | Ctrl + C |

Exit (Quit) | |

K s |

Skip forward K lines of text | |

K f |

Skip forward K full screens of text | |

K b | K Ctrl + B |

Skip back K full screens of text | |

' |

Go back to place where previous search started | |

= |

Display current line number | |

/pattern |

Search for pattern (string or regex) | |

Kn |

Search for the Kth occurrence of pattern (default 1) | |

!command | :!command |

Execute command in a sub-shell (then return to more) | |

v |

Start the vi text editor, cursor on current line | |

Ctrl + L |

Redraw the terminal or console window | |

K:n |

Go to Kth next file (default to 1) | |

K:p |

Go to Kth previous file (default to 1) | |

:f |

Display current file name and line number | |

. |

Repeat previous command |

less filterless is described in the system documentation as the opposite of more. This is rather tongue-in-cheek, as less is generally regarded as an extremely capable paging tool, with an option and feature set that greatly exceeds that of the more filter. less is the default paging tool for OpenLinux. man and other programs use less, as it is specified by the PAGER shell variable.

The options and commands as described above for more, work for less. Additionally, the cursor keys (arrows, Page Up, Page Down) are useful for file navigation. One of the main advantages of less is its facility for moving and searching backwards (towards the top of the file). For full details on the less filter, view the manual page.

gzip utilitygzip is a file compression utility that uses Lempel-Ziv coding. The tar command (below) can dynamically call gzip during tar's operation - this is the most common use of gzip. gunzip, the complementary program, is simply a call to gzip with the -d option active. The third related program is zcat, which decompresses manpages in preparation for display. gzip uses CRC (Cyclical Redundancy Checks) to verify data integrity.

gzip - compress or expand files (also gunzip and zcat)

Usage : gzip [options...] filename ...

|

|

-d | --decompress |

Decompress file |

-f | --force |

Compress or decompress, even if overwriting will occur |

-l | --list |

List compressed, uncompressed sizes, ratios and filenames for an existing .gz file specified by filename |

-L | --license |

Display the gzip license and exit |

-r | -- recursive |

If a directory argument is given, compress directory contents recursively |

-t | --test |

Check compressed file integrity |

-v | --verbose |

Display name and percent reduction for each file |

-N | --fast | --best |

Specify N from 1 (--fast) to 9 (--best) in tradeoff of execution speed version compression efficiency |

-h | --help |

Display help and exit |

-v | --version |

Show version information and exit |

filename |

List contents of, compress to, or decompress from filename |

Example:

[bilbrey@bobo test]$ ls -l

total 39

-rw-r-r-- 1 bilbrey users 38646 Apr 19 20:02 gzip_test

[bilbrey@bobo test]$ gzip -v gzip_test

gzip_test: 63.4% -- replaced with gzip_test.gz

[bilbrey@bobo test]$ ls -l

total 15

-rw-r-r-- 1 bilbrey users 14161 Apr 19 20:02 gzip_test.gz

Called interactively, gzip compresses and expands in place. The preceding example shows the original file and related information with the first ls -l command. The gzip command that follows is invoked using the verbose option to display the compression ratio as well as confirm that the original uncompressed file has been deleted and replaced by the compressed file. The final ls -l command is to confirm the contents of the directory as well as the file size reduction. The file gzip_test was a simple ASCII file, and achieved average compression ratios. A large uncompressed binary file (say a TIFF format graphics file) can reach compression ratios over 95%.

bzip2 utilitybzip2 is a newer file compression utility which has recently become part of most distributions. Some publishers (like OpenLinux) ship bzip2; other vendors do not include it as part of the standard distribution, but make it available as a supplemental package. bzip2 uses a block sorting compression algorithm in concert with Huffman coding to make for an extremely efficient compression, generally much better than gzip. A damaged bzip2 file can sometimes be recovered with the bzip2recover utility. The command line options are very similar (but not identical) to those of gzip.

bzip2 - a block sorting file compressor (also bunzip2 and bzcat)

Usage : bzip2 [options...] filename ...

|

|

-d | --decompress |

Decompress file (bzip2 -d == bunzip2) |

-z | --compress |

Compress file (bunzip2 -z == bzip2) |

-c | --stdout |

Compress/decompress to stdout (like bzcat) |

-f | --force |

Compress or decompress, even if overwriting will occur |

-t | --test |

Check compressed file integrity |

-k | --keep |

Don't delete input files during compress/decompress |

-s | --small |

Reduced memory footprint for low RAM (< 8MB) |

-v | --verbose |

Display name and percent reduction for each file |

-N |

Specify N from 1 to 9 - 9 is the default and reflects the largest block size - use smaller numbers for low RAM |

-h | --help |

Display help and exit |

-v | --version |

Show version information and exit |

filename |

File(s) to compress |

Example:

[bilbrey@bobo test]$ ls -l

total 3071

-rw-r-r-- 1 bilbrey users 2983659 Apr 19 20:02 dick.tif

-rw-r-r-- 1 bilbrey users 51836 Apr 19 20:02 harry.tif.bz2

-rw-r-r-- 1 bilbrey users 92514 Apr 19 20:02 tom.tif.gz

The preceding example shows three files, all of which were identical at the beginning of testing. The top file, dick.tif is an uncompressed tiff file, nearly 3M in size. The bottom file, tom.tif.gz is gzip'ed, which yielded a compression ratio of 96.9 percent. By contrast, the file harry.tif.bz2, compressed by bzip2, reflects a compression ratio of 98.2 percent.

Where does this small change in compression ratios make a real difference? The Linux kernel, for one. A gzipped 2.4.4 kernel is 25.1 MB. The comparable bzipped file is just 20.4 MB. That's nearly 5 MB difference, quite a lot over a dialup line. This argument applies for lots of network-based large software package distribution scenarios.

tar utilitytar is the predominant utility for archiving files, both for backup and distribution purposes. tar packs many individual files into a single archive file, preserving file ownership, permissions, and directory structure. The name tar is a contraction of Tape ARchive, owing to the program's background in writing to backup tapes. tar is gzip-aware - you can dynamically compress and decompress archives as tar reads and writes by including the -z option. This creates the commonly seen name.tar.gz files seen anyplace Linux programs are archived.

Today, tar files are used to distribute programs across the Internet and to make compressed local and remote backup files (including full system images). Specific files, and/or whole directory sub-trees may be archived, depending upon whether paths or files are arguments to tar. The backup scheme we used to ensure that this book's data was safe, in multiple locations, involved using tar to make archives of all the text and images generated. We use tar every day.

tar - The GNU version of the tar archiving utility

Usage : tar options... tarfile pathname | filename ...

|

|

| One (and only one) of the following options must be specified: | |

-A | --concatenate |

Append tar files to an archive |

-c | --create |

Create a new tar archive |

-d | --diff |

Find differences between system and archive contents |

--delete |

Delete from archive (not used with tape devices) |

-r | --append |

Append files to an existing archive |

-t | --list |

List tar archive contents |

-u | --update |

Append files newer than those in archive |

-x | --extract |

Extract files from an archive |

| Additional tar options include: | |

-f | --file F |

Specify tar archive file or device (a usual option) |

--checkpoint |

Print directory names as they are traversed |

-g | --incremental |

Incremental backup (new GNU format) |

-h | --dereference |

Copy files that links point to, rather than links |

-k | --keep-old-files |

Suppress overwrite of existing files on extract |

-K | --tape-length N |

Change tapes after N*1024 bytes written |

-M | --multi-volume |

Work with multi-volume (usually tape) archive |

--totals |

Print total bytes written with --create |

-v | --verbose |

List each file as it is processed |

-z | --gzip |

Filter the archive through the gzip utility |

-P | --absolute-paths |

Absolute path - do not strip leading slash from paths |

--help |

Display help and exit |

--version |

Show version information and exit |

tarfile |

Path and filename for tar archive |

pathname | filename |

Files and directories to archive (or extract) |

Example:

[bilbrey@bobo bilbrey]$ tar -zcvf ols.tar.gz Intro Ch*

Intro

Chapter01

Chapter02

* * *

Chapter29

The preceding example creates (-c) a new archive, called ols.tar.gz. By convention tar archives have a .tar file extension, and gzip files have a .gz file extension. One may find that gzipped tar files also have the "combo" extension of .tgz. Since we also specify that the archive be filtered through gzip (-z), the archive has the form given. The -v (verbose) option is included to give visual feedback that the correct files have been specified.

When tar archives are restored, as with the command tar -zxf ols.tar.gz, the files are placed into the file system with the path information that they were archived with. So the ols.tar.gz will unpack into whichever directory it is placed into, without creating a directory structure. If the original tar command had been typed as:

tar -zcvf ols2.tar.gz /home/bilbrey/Intro /home/bilbrey/Ch*

Then the files would have been archived (with the leading slash stripped) in the form home/bilbrey/Intro, and so on. When the ols2 archive is unpacked, it creates a home/bilbrey/* directory structure in the current directory.

Tip

As an archive tool, tar has one major shortcoming: a lack of error-checking as it works. If you get a corrupted block in a tarball, everything from that block on is corrupted. cpio is much smarter in this respect. That said, tar is still much more commonly used, for some reason. See the cpio manual page for all the details.

md5sum commandThere is often a need to generate a securely unique checksum for a file or package. md5sum computes a MD5 "Message Digest" or "fingerprint" for each file specified. Checksums are used to validate information transferred across the Internet, and to confirm that file contents are the same as at the time that an original fingerprint was created.

More Information

The RFC (Request for Comment) that defines the MD5 algorithm, its functions, and usages, is found at http://www.cis.ohio-state.edu/htbin/rfc/rfc1321.html. RFC1321 is an informational document (rather than an Internet standard) that describes MD5 as a message-digest algorithm that accepts an input of arbitrary size and produces a 128-bit "fingerprint." The theoretical odds of two random inputs generating the same message-digest are approximately 3.4 x 1038. MD5 is only about eight years old and the copyright is held by RSA Data Security, Inc.

md5sum - compute and check MD5 message digests

Usage : md5sum [options...] filename ...

|

|

-b | --binary |

Read file in binary mode |

-c | --check |

Check MD5 sums against given list (usually a file) |

-t | --text |

Read file in text mode (default) |

--status |

Suppress all output, use exit status code (with verify) |

-w | --warn |

Warn about improper lines in checksum file (with verify) |

-h | --help |

Display help and exit |

-v | --version |

Show version information and exit |

filename |

File(s) to compute checksum |

Example:

[bilbrey@grendel Linux-ix86-glibc21]$ md5sum --check SUMS.md5sum BugReport: FAILED Install: OK * * extract.exe: OK [bilbrey@grendel Linux-ix86-glibc21]$ md5sum BugReport 5f3688f3c1b1ad25f4cc82d753a3f633 BugReport

When XFree86 4.0 was first released, in March of 2000, we fetched the binaries to begin running tests. The first task was to check that the files had downloaded properly by using md5sum to confirm that the files had the same fingerprint as the files listed in SUMS.md5sum. This is the preceding example. We explicitly "damaged" the BugReport file to generate the error in the first line of the output (by adding a blank line to the top of the file).

find commandThe info documentation about find says "... how to find files that meet criteria you specify and how to perform various actions on the files that you find." Fundamentally, find permits very explicit specification of the features of files to be found, from date and size ranges, to file magic numbers (file type identifiers) and locations. Once found, files can be opened for editing, added to archives, or any number of other functions that make sense to perform on files.

find - Search for files in a directory hierarchy

Usage : find [path...] expression

|

|

-daystart |

Measure times from start of today, rather than 24 hours ago |

-depth |

Process directory contents before directory |

-follow |

Dereference symbolic links (look at linked files) |

-maxdepth N |

Descend no more than N (>0)directory levels |

-mindepth N |

Start looking (apply expressions) N directory levels down |

-mount |

Compatibility option, same as -xdev |

-xdev |

Don't descend directories on other filesystems (mounted) |

-v | --version |

Show version information and exit |

path |

Specify where to search (omitted/default: current directory) |

The expression part of find's usage is feature-packed and is documented in great detail in the info documentation (type info find).

Example:

[bilbrey@bobo bilbrey]$ find . -maxdepth 1 -mtime -1

./.wmrc

./.xsession-errors

* * *

./Chapter16

./newest

The preceding example finds all files in the current directory (.), only in the current directory (-maxdepth 1) which have been modified in the last 24 hours (-mtime -1). The output of the find command may be piped into the tar command, or into a text file to show your boss (or yourself) what you've worked on in the last day, for example, or to select a group of files for backup.

Hint

Find has an extraordinarily rich functionality. We strongly recommend reading through the info documentation to find the wide variety of examples and applications for this utility. Typeinfo findto get started.

Directories are simply special types of files, whose contents are lists of other files, along with pointers to the inodes (partition locations) that contain information about each file within the directory. There are special features of directories that prevent normal users from treating them as files. So, a directory may not be directly edited, and there are checks in place against easily deleting them, as all of the structure below is removed when a directory is erased. However, as we noted in the beginning of the Help and System Information Commands section, there's nothing that prevents you from using the rm -rf [filespec | directory] command or others similar to shoot yourself in the foot, quicker than quick. The following commands work explicitly with the directory file type.

mkdir commandDerived from "make directory", mkdir provides directory creation services.

mkdir - make directories

Usage : mkdir [options...] directory ...

|

|

-m |--mode=MODE |

Set mode (-umask, see Permissions) for new directory |

-p | --parents |

Create any intervening non-existent directories required |

--verbose |

Print message for each created directory |

-h | --help |

Display help and exit |

-v | --version |

Show version information and exit |

directory |

Name of directory(s) to create |

Examples:

[bilbrey@bobo bilbrey]$ mkdir --verbose one/two/three mkdir: cannot make directory 'one/two/three': No such file or directory [bilbrey@bobo bilbrey]$ mkdir --verbose --parents one/two/three created directory 'one' created directory 'one/two' mkdir: created directory 'one/two/three'

In the first example, mkdir fails, claiming no such file or directory. This is due to the requested directory creation requiring a pre-existing path called /home/bilbrey/one/two in which to create directory three. In the second example, by adding the --parents option, the intervening directories are created.

rmdir commandThe complement to the mkdir program, rmdir removes empty directories only. Non-empty directories are dealt with (carefully, carefully) using the rm -rf command (described earlier in this chapter).

rmdir - Remove empty directories

Usage : rmdir [options...] directory ...

|

|

-p | --parents |

Remove explicit parent directories that are emptied |

--verbose |

Print message for each directory removed |

-h | --help |

Display help and exit |

-v | --version |

Show version information and exit |

directory |

Name of directory(s) to remove |

Examples:

[bilbrey@bobo bilbrey]$ rmdir --verbose one/two/three

rmdir: removing directory, one/two/three

[bilbrey@bobo bilbrey]$ rmdir --verbose --parents one/two

rmdir: removing directory, one/two

rmdir: removing directory, one

As with the --parents option in mkdir, this option, demonstrated in the second example, permits rmdir to delete the parent for each deleted directory, as long as the parent is also empty after the child directory is removed.

The following message text indicates a problem with file or directory ownership or permission:

Permission Denied

What a user can do, which files may be read, written or executed, all of this and much more depends on the read, write and execute permission attributes set for each file and directory. Permissions and ownership are powerful, if sometimes perplexing, features built deeply into the multi-user environment of GNU/Linux. This complex system has many interlocking directories, programs, and systems. The resulting structure needs a basis for protecting information and data. Permission and ownership provide such a foundation.

Ownership is fairly straightforward. If you own something, you can do anything you want to it: delete a file, deny read access (even to yourself), anything. If you don't own something, then the owner controls all access, period. Root and various other system-defined users own most of the contents of the filesystem. This is as it should be. System security, including the security of Internet connectivity with Linux, depends upon correct ownership and permissions.

Additionally, a file or directory may be associated with a group (such as users). Groups are an extension of the filesystem attributes, to permit users to be aggregated (grouped) and permissions granted to groups, rather than having to grant (or deny) individual access. Group and user management, as well as the chown utility (to change file ownership - a root user function), are addressed in Chapter 19.

Permission, on the other hand, is just a little tricky. It has different effects, depending upon the target: files or directories. There are three types of permission: read, write, and execute. These permission types can be applied to the owner, group, or other. Other is the collection of users not equal to owner or group.

[bilbrey@bobo bilbrey]$ ls -l newfile

-rw-r--r-- 1 bilbrey users 0 Apr 22 16:18 newfile

Using the ls command on a newfile created for the purpose, the attributes of a (nearly) normal file are revealed. The permissions string, which we address momentarily, leads off. The '1' indicates that there is only one hard link to this file's inode (see Links, below). Then the file owner, bilbrey, and the default group for the file, users, is given. File size, modification date and time, and lastly file name, round out the majority of the information.

- r w - r - - r - -

type | owner | group | other |

Taken as a whole - the permissions string is referred to as a file's mode. The leading character of the permission string shows the file type: - indicates a normal file, d is a directory, l is a symbolic link, c and b are reserved for character and block devices, respectively. As the owner of the file, bilbrey created newfile and automatically got both read and write permission. By default, all other individuals, either in the group users, or others, have read-only access to the file. For normal files, read permission grants the boon of examining a file's contents. Write permission allows both changing and deleting files. Execute permission permits the file to be run as a program or script. The identity and group affiliations of an individual attempting to access newfile determine whether she or he is successful or not.

On the other hand, when the permissions are applied to a directory, there are some interesting effects. Write permission is clear - access to write and delete files in the directory is granted. Read permits listing the contents of the directory. Execute permission, in this context, allows access to the contents of the directory. Thus, a directory that has execute but no read permission has files that can be accessed if the exact name is known. The files in the directory may not be listed to determine a name.

[bilbrey@bobo bilbrey]$ mkdir sample17

[bilbrey@bobo bilbrey]$ ls -l sample17

drwxr-xr-x 2 bilbrey users 1024 Apr 24 00:05 sample17

Note the default permissions for creating a directory give full access to the creating user, and full read access to group and world (others).

chmod commandThe owner of a file has the ability to change a file's mode by using chmod. There are two ways of addressing the mode string for a file, either symbolically, as discussed previously, or directly by specifying the octal number, which represents permissions as a bit string. At the risk of being repetitive, let's start with symbolic mode. For this command, we'll look at examples and explanations first, to give the program a conceptual framework.

There are three types of permission: r, w and x, for read, write, and execute. There are three types of access: u, g, and o, symbolizing user, group, and other (world). The access types may be grouped with an a, standing for all. So, to change the file Script01 so that all users on the system can access it for read and write:

[bilbrey@bobo bilbrey]$ chmod a+rw Script01

[bilbrey@bobo bilbrey]$ ls -l Script01

-rw-rw-rw- 1 bilbrey users 1024 Apr 24 00:18 Script01

Then to add execute permission for members of the same group, run the following command:

[bilbrey@bobo bilbrey]$ chmod g+x Script01

Alternatively, chmod uses octal numbers (base 8, numbers 0 through 7) to set permissions. The advantage of using the numeric mode is that fine-grained permission control is available using a very brief command. Numeric mode is absolute. All of a file's permissions are set in one command.

[bilbrey@bobo bilbrey]$ chmod 755 Script01

[bilbrey@bobo bilbrey]$ ls -l Script01

-rwxr-xr-x 1 bilbrey users 1024 Apr 24 00:18 Script01

In the same manner that the decimal system uses 10 numbers, 0..9, octal representations use the numbers 0..7. In binary notation (as used by the computer), octal numbers can be represented with 3 bits.

Table 17-2

Numeric (Octal) Permissions

| Octal | Binary | Permission>|

0 |

000 | >

None |

1 |

001 | >

x |

2 |

010 | >

w |

3 |

011 | >

wx |

4 |

100 | >

r |

5 |

101 | >

rx |

6 |

110 | >

rw |

7 |

111 | >

rwx |

Octal one (001), two (010), and four (100) represent distinct bit patterns, representing execute, write, and read permission, respectively. Bear in mind that the order of access listed is user, group, and then other. So to assign user permission of read, write, and execute, and read-only access for group and other, the octal mode is 744. if you take out the 001 (binary) that represents user execute permission, use 644 for user read/write.

For files that you want to keep secret from everyone but yourself and the root user, use a permission of 600, which gives no one else any access at all.

chmod - Change file access permissions

Usage : chmod [option...] mode[,mode]... filename...

|

|

-c | --changes |

Semi-verbose - print changes only |

-f | --silent | --quiet |

Suppress most error messages |

--reference=rfile |

Make permissions like rfile's |

-R | --recursive |

Change files in specified directories recursively |

-v |--verbose |

Print message for each file or directory |

-h | --help |

Display help and exit |

-v | --version |

Show version information and exit |

filename |

File(s) for permissions modification |

Symbolic modes may be concatenated, leading to commands like the following (which illustrates why numeric mode is useful - the second line has identical consequences):

[bilbrey@bobo bilbrey]$ chmod u+rw,u-x,go+rx,go-w Chapter16.txt

[bilbrey@bobo bilbrey]$ chmod 655 Chapter16.txt

Another program that can be used for file permission modifications is chattr. chattr is specific to Linux ext2 filesystems. Learn more by typing man chattr.

chgrp commandThe chgrp program is a utility to change the group associated with a file or directory. This is often done to make specific files or subdirectories available to other users for project or departmental purposes. The root user administers groups.

After a group is created, a user needs to log out completely, then login again in order to be recognized in the new group.

chgrp - Change file group ownership

Usage : chmod [option...] group filename...

|

|

-c | --changes |

Semi-verbose - print changes only |

-f | --silent | --quiet |

Suppress most error messages |

--reference=rfile |

Make group like rfile's |

-R | --recursive |

Change files in specified directories recursively |

-v |--verbose |

Print message for file specified |

-h | --help |

Display help and exit |

-v | --version |

Show version information and exit |

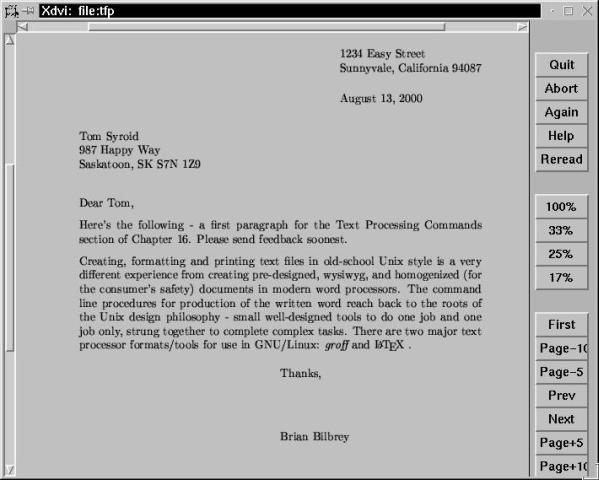

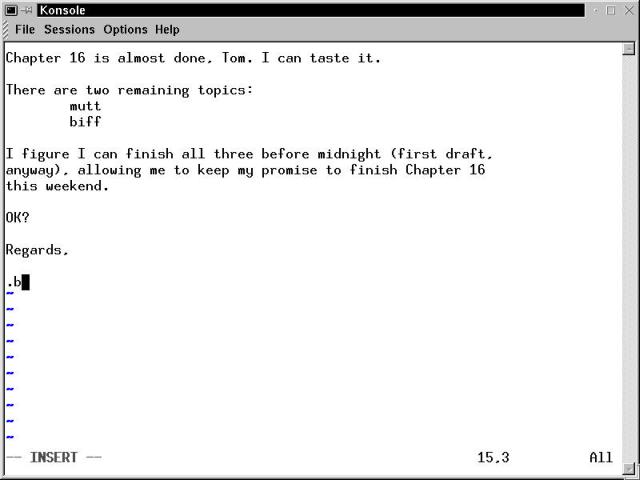

filename |